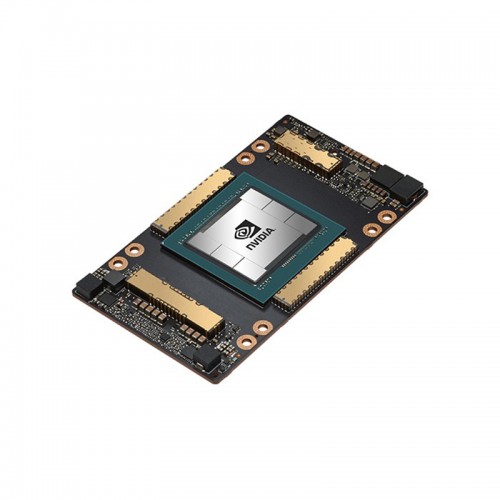

NVIDIA A100 (Customized)

Overview

The NVIDIA A100 (Customized) is a high-performance data center GPU designed for AI, machine learning, deep learning, HPC (High-Performance Computing), and large-scale analytics. This customized version provides enhanced performance and memory configurations to meet specific enterprise and research requirements.

The A100 accelerates compute-intensive workloads such as AI model training, high-throughput inference, and large-scale simulations. Multiple cards can be linked with NVLink for multi-GPU scaling, which makes the A100 suitable for cloud, on-premises, and hybrid deployments.

Key Features

- NVIDIA Ampere architecture with CUDA cores and third-generation Tensor Cores (the A100 is a compute GPU and has no RT cores)

- Large memory capacity with HBM2 or customized memory options for handling massive datasets

- Multi-GPU support with NVLink for scalable high-performance compute clusters

- Enterprise-grade reliability with ECC memory and robust thermal design

- Optimized for AI training, inference, HPC, and data analytics workloads

- Supports virtualization for multi-user GPU workloads

- PCIe 4.0 or NVLink interconnects for high-speed data throughput

- Enhanced performance for deep learning frameworks, scientific computing, and professional software

- Customizable specifications to meet enterprise or research requirements
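When choosing between memory configurations, a rough first check is whether a model's weights fit on a single card. The sketch below is an illustrative estimate only: it counts weights alone (no activations, optimizer state, or framework overhead), treats 1 GB as 10^9 bytes, and the function names `weights_gb` and `fits_on_card` are hypothetical helpers, not part of any NVIDIA tool.

```python
# Bytes needed to store one parameter in common numeric formats.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def weights_gb(num_params, dtype):
    """Approximate size of the model weights in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits_on_card(num_params, dtype, card_memory_gb):
    """True if the weights alone fit in the given card memory.

    Assumption: real workloads need extra headroom for activations,
    optimizer state, and buffers, so treat True as a necessary
    condition, not a guarantee.
    """
    return weights_gb(num_params, dtype) <= card_memory_gb


# Example: a 30-billion-parameter model stored in fp16.
print(weights_gb(30_000_000_000, "fp16"))
print(fits_on_card(30_000_000_000, "fp16", 40))
print(fits_on_card(30_000_000_000, "fp16", 80))
```

Under these assumptions, weights that exceed the smaller memory option point toward the larger configuration or toward splitting the model across several NVLink-connected cards.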

Specifications

| Specification | Detail |
| --- | --- |
| GPU Architecture | NVIDIA Ampere |
| CUDA Cores | 6,912–7,680 (depending on customized configuration) |
| Tensor Cores | 432 (3rd generation) |
| Memory | 40–80 GB HBM2 or customized options |
| Memory Bandwidth | 1.6–2.0 TB/s depending on configuration |
| NVLink Support | Yes, for multi-GPU scaling |
| PCI Express | PCIe 4.0 x16 or customized interconnect |
| Form Factor | Dual-slot, full-height, customized for server compatibility |
| TDP (Thermal Design Power) | 250–400 W depending on configuration |
| Cooling | Active cooling with server-optimized thermal design |
| Operating Temperature | 0 °C to 50 °C |
| Use Cases / Workload Fit | AI/ML training, HPC simulations, deep learning inference, data analytics, multi-GPU clusters |
| Certifications | CE, FCC, RoHS |
| Warranty / Support Options | Standard NVIDIA warranty; optional enterprise support and service contracts available |
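
Because several of the values above are ranges that depend on the customized configuration, it can be useful to check an installed card against them. The following is a minimal sketch, assuming the NVIDIA driver and `nvidia-smi` are installed on the host. It parses the output of `nvidia-smi --query-gpu=name,memory.total,power.limit --format=csv,noheader,nounits`, which reports memory in MiB and the power limit in watts. The helper names, the 5% memory tolerance, and the sample row are illustrative assumptions; the ranges are copied from the specification table.

```python
import csv
import io
import subprocess

# Ranges taken from the specification table above.
MEMORY_RANGE = (40, 80)      # nominal capacity, compared here in GiB
POWER_RANGE_W = (250, 400)   # TDP range in watts
# Assumption: the driver may report slightly less than nominal memory.
MEMORY_TOLERANCE = 0.05

QUERY = [
    "nvidia-smi",
    "--query-gpu=name,memory.total,power.limit",
    "--format=csv,noheader,nounits",
]


def parse_gpu_rows(text):
    """Parse nvidia-smi CSV output into one dict per GPU."""
    gpus = []
    for record in csv.reader(io.StringIO(text), skipinitialspace=True):
        if len(record) != 3:
            continue  # skip blank or malformed lines
        name, mem_mib, power_w = (field.strip() for field in record)
        gpus.append({
            "name": name,
            "memory_gib": float(mem_mib) / 1024,
            # Assumption: the power limit is numeric; some systems
            # report "[N/A]", which this sketch does not handle.
            "power_limit_w": float(power_w),
        })
    return gpus


def within_spec(gpu):
    """True if memory and power limit fall inside the listed ranges."""
    mem_lo = MEMORY_RANGE[0] * (1 - MEMORY_TOLERANCE)
    mem_hi = MEMORY_RANGE[1] * (1 + MEMORY_TOLERANCE)
    mem_ok = mem_lo <= gpu["memory_gib"] <= mem_hi
    power_ok = POWER_RANGE_W[0] <= gpu["power_limit_w"] <= POWER_RANGE_W[1]
    return mem_ok and power_ok


def query_local_gpus():
    """Run nvidia-smi on this host and return the parsed rows."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpu_rows(result.stdout)
```

On a host with the card installed, `[within_spec(g) for g in query_local_gpus()]` gives one result per GPU. The check covers only memory and power limit; core counts and memory bandwidth are not exposed by this query and would need to be confirmed against the vendor's configuration sheet.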