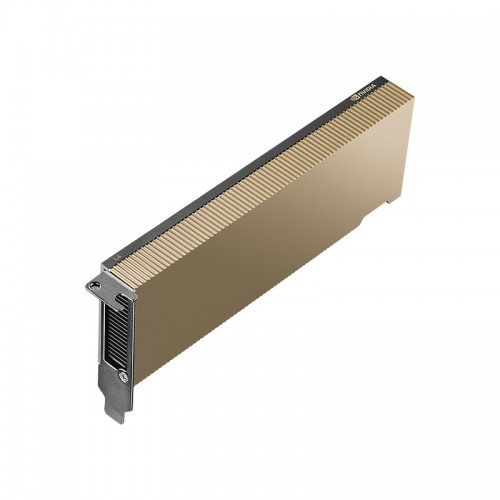

- NVIDIA L4 24GB

Overview

The NVIDIA L4 24GB is a server GPU for AI inference, deep learning, virtual desktop infrastructure (VDI), and professional visualization. Its 24 GB of GDDR6 memory supports workloads such as machine learning models, multimedia processing, and real-time graphics rendering.

Built for data center deployment, the L4 24GB targets energy-efficient computing for enterprise applications, cloud services, and AI-driven workloads.

Key Features

- 24GB GPU memory for medium to large-scale AI and visualization workloads

- NVIDIA Ada Lovelace architecture optimized for AI, machine learning, and graphics tasks

- PCIe Gen4 interface for server integration

- CUDA support, with Tensor Cores for AI and deep learning and RT Cores for ray tracing

- ECC memory support for high reliability in enterprise environments

- Energy-efficient and compact form factor suitable for 24/7 operation

- Ideal for AI inference, VDI, rendering, and professional visualization
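As a rough illustration of what 24 GB means for inference sizing, the sketch below estimates the memory taken by model weights at a few common precisions. The function names, the parameter counts, and the 20% headroom for activations and runtime overhead are assumptions made for this example, not vendor guidance; usable memory on a real card is also somewhat below the nominal 24 GB.

```python
# Rough sizing sketch: do a model's weights fit in 24 GB of GPU memory?
# All names and the overhead fraction are illustrative assumptions.

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}
GPU_MEMORY_GB = 24  # nominal capacity from the product description


def weight_footprint_gb(num_params: float, dtype: str) -> float:
    """Memory needed for the weights alone, in decimal gigabytes."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits(num_params: float, dtype: str, overhead_fraction: float = 0.2) -> bool:
    """True if weights plus an assumed overhead fit in GPU_MEMORY_GB.

    overhead_fraction is a guess covering activations, caches, and
    runtime allocations; real overhead depends on the workload.
    """
    needed = weight_footprint_gb(num_params, dtype) * (1 + overhead_fraction)
    return needed <= GPU_MEMORY_GB


if __name__ == "__main__":
    for params in (7e9, 13e9):
        for dtype in BYTES_PER_PARAM:
            size = weight_footprint_gb(params, dtype)
            verdict = "fits" if fits(params, dtype) else "does not fit"
            print(f"{params / 1e9:.0f}B params @ {dtype}: {size:.1f} GB weights, {verdict}")
```

Under these assumptions a 7-billion-parameter model fits at fp16 but not at fp32, which is why reduced precision is common for inference on a card of this size.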

Specifications

| Specification | Detail |
| --- | --- |
| GPU Architecture | NVIDIA Ada Lovelace |
| CUDA Cores | 7,424 cores for parallel computing |
| Tensor Cores | 4th generation, optimized for AI and deep learning |
| RT Cores | 3rd generation, supports real-time ray tracing workloads |
| Memory | 24 GB |
| Memory Type | GDDR6 |
| Memory Interface | 192-bit |
| Memory Bandwidth | 300 GB/s |
| PCI Express | PCIe Gen4 x16 |
| Form Factor | Single-slot, low-profile; server / workstation optimized |
| Cooling | Passive (relies on server chassis airflow) |
| Use Cases / Workload Fit | AI inference, deep learning, VDI, professional visualization, multimedia processing |
| Certifications | CE, FCC, RoHS |
| Warranty / Support Options | Standard NVIDIA warranty; optional enterprise support available |
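After installation, the listed specifications can be cross-checked against what the driver reports. The sketch below is one possible way to do that with `nvidia-smi`'s `--query-gpu` option; the helper names and the sample line are hypothetical and shown only to illustrate the parsing, and the reported memory total is normally somewhat below the nominal 24 GB.

```python
# Sketch: read basic GPU properties from nvidia-smi and parse them.
# Helper names are illustrative; the sample line below is not captured output.
import subprocess

QUERY_FIELDS = ["name", "memory.total", "ecc.mode.current", "pcie.link.gen.max"]


def parse_gpu_line(line: str) -> dict:
    """Turn one CSV line from nvidia-smi into a field -> value mapping."""
    values = [value.strip() for value in line.split(",")]
    return dict(zip(QUERY_FIELDS, values))


def query_gpus() -> list:
    """Run nvidia-smi and return one dict per detected GPU."""
    result = subprocess.run(
        [
            "nvidia-smi",
            "--query-gpu=" + ",".join(QUERY_FIELDS),
            "--format=csv,noheader,nounits",
        ],
        capture_output=True,
        text=True,
        check=True,
    )
    return [parse_gpu_line(line) for line in result.stdout.splitlines() if line.strip()]


if __name__ == "__main__":
    # Requires an NVIDIA driver; memory.total is reported in MiB with nounits.
    for gpu in query_gpus():
        print(gpu)
```

Calling `parse_gpu_line("NVIDIA L4, 23034, Enabled, 4")` on a made-up line of that shape yields a dictionary keyed by the field names in `QUERY_FIELDS`, which can then be compared with the table above.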