
Supermicro SYS-521GE-TNRT With Nvidia H200 NVL 141GB


Description

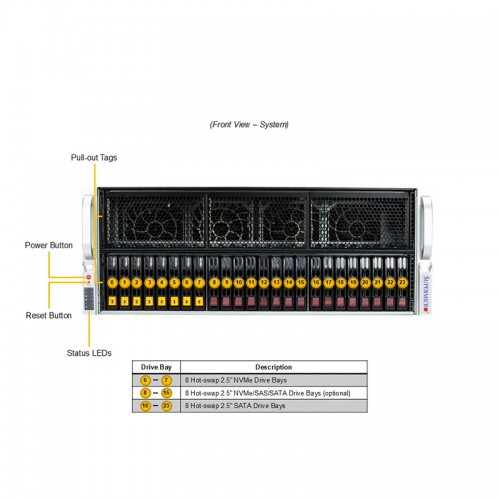

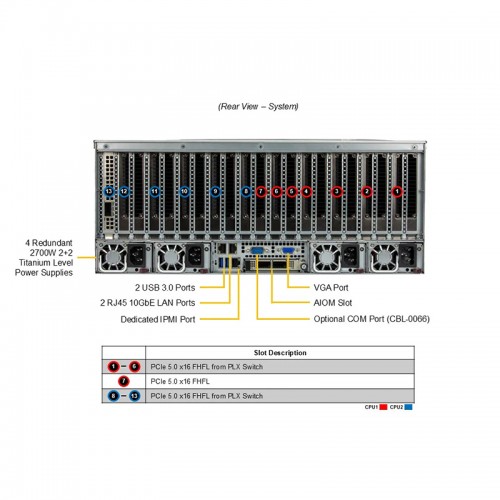

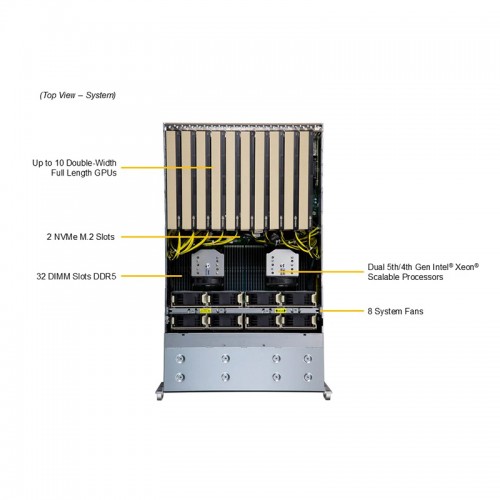

The Supermicro SYS-521GE-TNRT is a 5U GPU-optimized server built for extreme-scale AI, machine learning, HPC, and data-intensive workloads. This configuration pairs dual Intel Xeon Platinum 8480+ (Sapphire Rapids) processors with 112 cores in total, 2TB of DDR5 ECC memory, 8x NVIDIA H200 NVL GPUs (141GB each), and high-bandwidth networking (200Gb HDR and 100GbE), delivering the performance that demanding enterprise AI and scientific computing tasks require.

Its Dual Root Complex architecture splits the GPUs across both CPUs' PCIe Gen5 root complexes, balancing host-to-GPU bandwidth between the two sockets and helping large parallel workloads scale.

Part List

| Component | Description | Qty |
|---|---|---|
| Chassis | SYS-521GE-TNRT 5U Gen5 Dual Root GPU Server | 1 |
| CPU | Intel Xeon Platinum 8480+ (56C, 2.0GHz, 350W, 105MB cache) | 2 |
| Memory | 64GB DDR5-4800 ECC Registered DIMM (total: 2TB) | 32 |
| Boot Drives | Samsung PM9A3 1.9TB M.2 NVMe PCIe Gen4 (SED) | 2 |
| Storage Drives | 3.8TB NVMe U.2 SSD (7450 PRO, PCIe 4.0, 1 DWPD) | 8 |
| GPU | NVIDIA H200 NVL 141GB, PCIe Gen5 x16 | 8 |
| Networking 1 | 2-port 100GbE QSFP28 Mellanox ConnectX-6 DX | 1 |
| Networking 2 | 2-port 200Gb HDR QSFP56 Mellanox ConnectX-6 VPI | 2 |
| Security | TPM 2.0 Hardware Encryption Module | 1 |
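As a sanity check, the aggregate figures quoted elsewhere on this page can be recomputed from the part list. The Python sketch below is illustrative only: the `Part` structure and its field names are hypothetical, capacities use the decimal units listed in the table, and the thread count assumes Hyper-Threading is enabled (two threads per core).

```python
from dataclasses import dataclass


@dataclass
class Part:
    # Hypothetical record transcribed by hand from the part list above.
    name: str
    qty: int
    per_unit: float  # capacity of one unit, in the unit named in `name`


def total(part: Part) -> float:
    """Aggregate capacity = quantity x per-unit capacity."""
    return part.qty * part.per_unit


cpu_cores = Part("Xeon Platinum 8480+ cores", qty=2, per_unit=56)
cpu_cache_mb = Part("Xeon Platinum 8480+ cache (MB)", qty=2, per_unit=105)
dimms_gb = Part("64GB DDR5-4800 RDIMM (GB)", qty=32, per_unit=64)
gpu_mem_gb = Part("H200 NVL HBM3e (GB)", qty=8, per_unit=141)
data_ssd_tb = Part("3.8TB U.2 NVMe (TB)", qty=8, per_unit=3.8)
boot_ssd_tb = Part("1.9TB M.2 NVMe (TB)", qty=2, per_unit=1.9)

totals = {
    "cores": total(cpu_cores),
    "threads": total(cpu_cores) * 2,  # assumes Hyper-Threading: 2 threads per core
    "l3_cache_mb": total(cpu_cache_mb),
    "system_ram_gb": total(dimms_gb),
    "gpu_mem_gb": total(gpu_mem_gb),
    "data_nvme_tb": round(total(data_ssd_tb), 2),
    "all_nvme_tb": round(total(data_ssd_tb) + total(boot_ssd_tb), 2),
}

for key, value in totals.items():
    print(f"{key}: {value:g}")
```

Run as-is, it reports 112 cores (224 threads), 210MB of cache, 2,048GB of system RAM, 1,128GB of GPU memory, and 30.4TB of data NVMe capacity (34.2TB including the boot drives).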

Key Features

✅ 112 cores from dual Xeon Platinum 8480+ CPUs for high-performance multithreaded compute.

✅ 2TB DDR5 ECC Memory for massive in-memory data handling and model training.

✅ 8x NVIDIA H200 NVL GPUs (141GB each) for advanced AI training/inference on Gen5 PCIe.

✅ 2- or 4-way NVIDIA NVLink bridge: up to 900GB/s per GPU, versus 128GB/s over PCIe Gen5 x16.

✅ 8x NVMe U.2 SSDs + dual M.2 OS drives – blazing-fast storage with enterprise endurance.

✅ Dual 100GbE + Dual 200Gb HDR connectivity for scalable cluster performance.

✅ PCIe Gen5 platform – Gen5 x16 links to every GPU, unlocking next-gen throughput and GPU scaling.

✅ TPM 2.0 for enterprise-grade security, firmware protection & secure boot.

✅ Dual Root Complex enabling parallel GPU operations with maximum PCIe efficiency.
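The NVLink and PCIe figures above are headline peaks. A rough back-of-the-envelope comparison is sketched below, assuming the quoted numbers are usable throughput with no protocol overhead; both are aggregate bidirectional peaks, so real one-way transfers will be slower. The 141GB payload is a hypothetical example.

```python
# Headline per-GPU interconnect figures quoted on this page, in GB/s.
# Both are aggregate (bidirectional) peaks; treat results as optimistic bounds.
NVLINK_GBPS = 900.0     # 2- or 4-way NVLink bridge, per GPU
PCIE_GEN5_GBPS = 128.0  # PCIe Gen5 x16


def ideal_transfer_seconds(size_gb: float, bandwidth_gbps: float) -> float:
    """Optimistic lower bound: size / peak bandwidth, ignoring overhead."""
    return size_gb / bandwidth_gbps


payload_gb = 141.0  # hypothetical: one GPU's full HBM3e contents
ratio = NVLINK_GBPS / PCIE_GEN5_GBPS
t_nvlink = ideal_transfer_seconds(payload_gb, NVLINK_GBPS)
t_pcie = ideal_transfer_seconds(payload_gb, PCIE_GEN5_GBPS)

print(f"NVLink/PCIe peak ratio: {ratio:.2f}x")
print(f"{payload_gb:g}GB over NVLink: >= {t_nvlink:.3f} s")
print(f"{payload_gb:g}GB over PCIe Gen5: >= {t_pcie:.3f} s")
```

NVLink bridges only join GPUs within the same 2- or 4-way group; traffic between groups still travels over PCIe, so the roughly 7x advantage applies only inside a bridged group.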

Key Applications

- Large Language Model (LLM) Training

- LLM inference/fine-tuning at scale

- Big Data Analytics / Real-Time Decision Making

- Scientific simulations and HPC workloads

- Enterprise AI deployments (vision, speech, multimodal)

Advantages

Maximum Compute Density

- 2× Intel Xeon Platinum 8480+ = 112 cores (224 threads with Hyper-Threading) and 210MB of L3 cache – well suited to CPU-intensive work such as AI pre-processing, simulation, and orchestration.

State-of-the-Art AI Acceleration

- 8× NVIDIA H200 NVL 141GB – Next-generation GPU for deep learning, optimized for large transformer models, LLMs, and generative AI pipelines with HBM3e memory and Gen5 PCIe bandwidth.
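To put 141GB per GPU in perspective, a weights-only upper bound on model size follows from dividing memory by bytes per parameter. This is a simplified sketch, assuming 2 bytes per parameter for BF16 and 1 byte for FP8; it ignores KV cache, activations, optimizer state, and framework overhead, so practical limits are noticeably lower, especially for training.

```python
# Bytes needed to store one parameter in common precisions.
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp8": 1}
HBM_PER_GPU_GB = 141  # H200 NVL memory per GPU, from this page


def max_params_billion(mem_gb: float, dtype: str) -> float:
    """Weights-only upper bound: GB / bytes-per-param = billions of params.

    Ignores KV cache, activations, optimizer state and framework overhead.
    """
    return mem_gb / BYTES_PER_PARAM[dtype]


for gpus in (1, 4, 8):  # one GPU, one 4-way NVLink group, the whole server
    mem = gpus * HBM_PER_GPU_GB
    print(
        f"{gpus} GPU(s), {mem} GB: "
        f"bf16 <= {max_params_billion(mem, 'bf16'):.1f}B, "
        f"fp8 <= {max_params_billion(mem, 'fp8'):.1f}B params"
    )
```

By this measure a single GPU holds roughly 70.5B BF16 parameters, and all eight GPUs (1,128GB) roughly 564B, before any runtime memory is accounted for.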

High-Capacity, High-Speed Memory

- 2TB of DDR5-4800 ECC Registered memory (32× 64GB DIMMs) provides fast access to large in-memory datasets and compute tasks.
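Theoretical peak memory bandwidth can be estimated from channel count and transfer rate. The sketch assumes 8 DDR5 channels per Sapphire Rapids socket (so the 32 DIMMs run two per channel) and 8 data bytes per transfer. Because 4th Gen Xeon platforms generally run two DIMMs per channel at up to 4400MT/s rather than 4800MT/s, both speeds are shown; the configured speed should be confirmed in the BIOS.

```python
CHANNELS_PER_SOCKET = 8  # assumed: DDR5 channels per Sapphire Rapids socket
SOCKETS = 2
DIMMS = 32
BYTES_PER_TRANSFER = 8   # 64-bit data path per channel (ECC bits excluded)


def peak_bandwidth_gbs(mt_per_s: int, channels: int) -> float:
    """Theoretical peak = transfers/s x bytes/transfer x channels, in GB/s."""
    return mt_per_s * 1e6 * BYTES_PER_TRANSFER * channels / 1e9


channels = CHANNELS_PER_SOCKET * SOCKETS
dimms_per_channel = DIMMS // channels
print(f"{channels} channels, {dimms_per_channel} DIMMs per channel")
for speed in (4800, 4400):
    print(f"DDR5-{speed}: {peak_bandwidth_gbs(speed, channels):.1f} GB/s peak")
```

This gives about 614.4GB/s at 4800MT/s and 563.2GB/s at 4400MT/s across both sockets; sustained bandwidth in practice is lower.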

Enterprise NVMe Storage

- 8× 3.8TB 7450 PRO NVMe SSDs provide about 30TB of data capacity (over 34TB counting the two M.2 boot drives), ideal for AI datasets, caching, and high-throughput I/O pipelines.

Extreme Connectivity

- Dual 100GbE + Dual 200Gb HDR for real-time data movement, clustering, and multi-node GPU workloads with low latency.
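Network rates are quoted in bits per second, which is easy to misread next to storage and memory figures in bytes. A minimal sketch of the conversion and an idealized transfer time, assuming full line rate on a single port with no protocol overhead; the 1,128GB payload (the combined HBM3e of all eight GPUs) is a hypothetical example.

```python
# Per-port line rates from the part list, in gigabits per second.
LINKS_GBITS = {
    "100GbE (ConnectX-6 DX)": 100,
    "200Gb HDR (ConnectX-6 VPI)": 200,
}


def gbits_to_gbytes(gbits_per_s: float) -> float:
    """Network rates are quoted in bits; divide by 8 for bytes."""
    return gbits_per_s / 8


def ideal_seconds(size_gb: float, gbits_per_s: float) -> float:
    """Best case at full line rate on one port, ignoring protocol overhead."""
    return size_gb / gbits_to_gbytes(gbits_per_s)


payload_gb = 8 * 141  # hypothetical payload: all eight GPUs' HBM3e contents
for name, rate in LINKS_GBITS.items():
    print(
        f"{name}: {gbits_to_gbytes(rate):.1f} GB/s, "
        f"{payload_gb} GB in >= {ideal_seconds(payload_gb, rate):.1f} s"
    )
```

A single 200Gb HDR port moves at most 25GB/s, roughly one fifth of one GPU's PCIe Gen5 x16 headline figure, which is why multi-node jobs benefit from using several ports in parallel.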

Security & Reliability

- Built-in TPM 2.0, Secure Boot, self-encrypting (SED) boot SSDs, and ECC memory make this server highly secure and resilient.

Why Should You Choose This Server Right Now?

✅ Outrun your competition in AI training and inference – thanks to the H200 NVL GPUs and full PCIe Gen5 bandwidth.

✅ Future-proof your infrastructure – Intel Sapphire Rapids, DDR5, Gen5 PCIe, and advanced networking mean you won’t need upgrades for years.

✅ Enterprise-ready design – hot-swappable NVMe, TPM 2.0, full remote management, and redundant networking options.

✅ Efficient 5U Form Factor – delivers massive GPU power without compromising cooling or serviceability.

✅ Designed for the AI era – perfectly suited for organizations investing in LLMs and generative AI.
