SYS-821GE-TNHR With NVIDIA H200 141GB

Summary

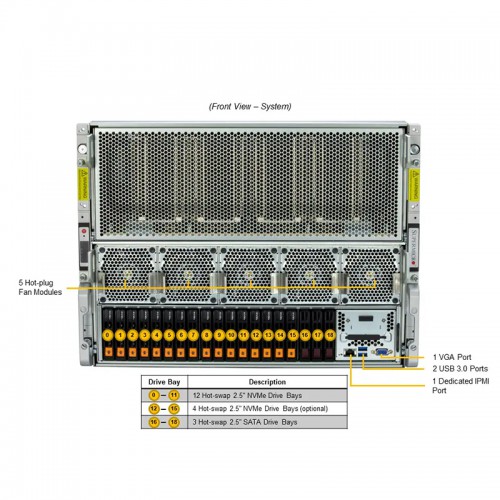

The Supermicro SYS-821GE-TNHR is an ultra-powerful 8U GPU server designed for the next generation of AI/ML training, high-performance computing, and data center-scale inferencing.

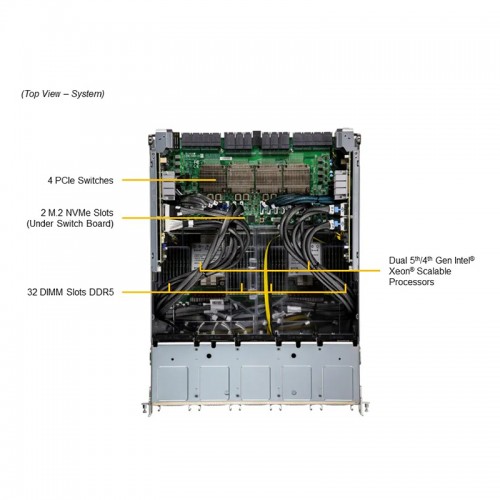

At its core is the NVIDIA HGX H200 baseboard with 8x NVIDIA H200 141GB GPUs, paired with dual Intel Xeon Platinum 8468 (Sapphire Rapids) CPUs (96 cores total), 2TB of DDR5 ECC memory, and PCIe Gen4 NVMe storage, all built to meet the computational demands of large AI models and LLM workloads.

The system also features multiple 400Gb/s network ports, support for GenZ and PCIe Gen5, and Supermicro's data center management tools.

Component

Description

Qty

Chassis

SYS-821GE-TNHR – 8U GPU Server with Rear I/O

1

GPU

HGX Baseboard with 8x NVIDIA H200 141GB

1

CPU

2x Intel Xeon Platinum 8468 (48 cores, 2.1GHz, 350W)

2

Memory

32x 64GB DDR5-4800 ECC REG (Total: 2TB)

32

NVMe Storage

8x 3.8TB + 2x 960GB 7500 PRO NVMe U.2 SSDs

10

Networking

8x 400GbE OSFP (CX7) + 2x 200Gb HDR (CX6 VPI)

10

Management

Supermicro DataCenter Management Suite

1

Security

TPM 2.0 Hardware Security Module

1

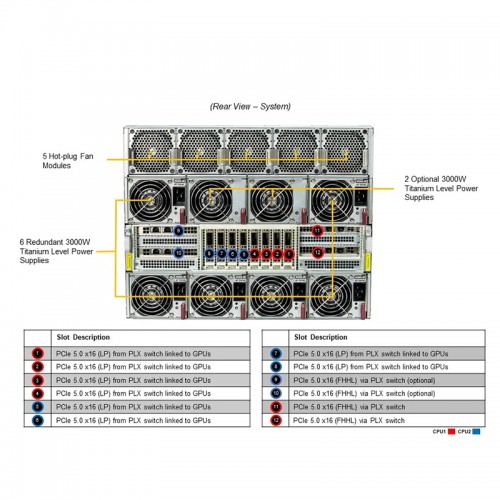

PSU

2x 3000W Titanium Efficiency Power Supplies

2
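As a quick sanity check on the part list, the memory and storage totals can be tallied from the listed quantities. This is only an illustrative sketch: the dictionary layout and function name are hypothetical, and capacities use the decimal units quoted in the listing.

```python
# Illustrative tally of the part list. The dictionary layout is hypothetical;
# the counts and sizes are copied from the part list (sizes in GB).
PART_LIST_GB = {
    "system_memory": [(32, 64)],            # 32x 64GB DDR5 DIMMs
    "nvme_storage": [(8, 3800), (2, 960)],  # 8x 3.8TB + 2x 960GB SSDs
}


def total_gb(entries):
    """Sum count * size_gb over a list of (count, size_gb) pairs."""
    return sum(count * size_gb for count, size_gb in entries)


memory_gb = total_gb(PART_LIST_GB["system_memory"])
storage_gb = total_gb(PART_LIST_GB["nvme_storage"])

print(f"System memory: {memory_gb} GB ({memory_gb / 1024:.0f} TB)")
print(f"Raw NVMe capacity: {storage_gb / 1000:.2f} TB")
```

With the listed quantities this works out to 2,048GB of system memory and roughly 32.3TB of raw NVMe capacity, before any RAID or filesystem overhead.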

Key Features

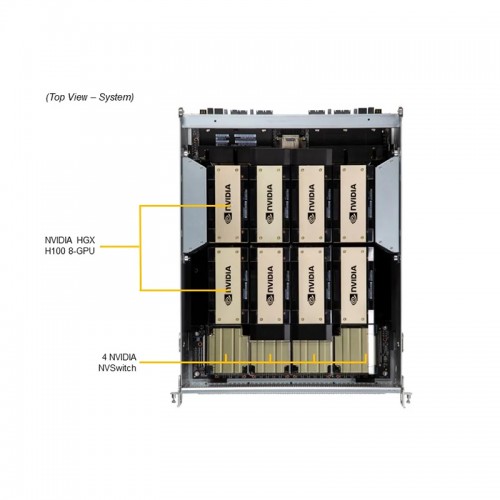

✅ 8x NVIDIA H200 (141GB) – massive memory for LLMs, generative AI, and GPU-accelerated workloads

✅ 96-core Sapphire Rapids CPU power – for preprocessing, scheduling, and orchestration

✅ 2TB DDR5 ECC RAM – ultra-fast bandwidth and stability for in-memory computing

✅ 10x Gen4 NVMe SSDs – fast, reliable storage ideal for real-time analytics & training datasets

✅ 400GbE & 200Gb HDR network – perfect for distributed training and scale-out AI clusters

✅ PCIe Gen5 infrastructure – full bandwidth to every GPU and high-speed IO

✅ TPM 2.0 & Secure Boot – enterprise-grade security, firmware integrity

✅ Rear I/O Design – optimized airflow and easier cable management in dense data centers

✅ Supermicro DCM Software – full-stack server monitoring and lifecycle management

Key Applications

- LLM Training & Inferencing (GPT, BERT, LLaMA, Claude, Gemini)

- Multi-node Distributed Deep Learning (PyTorch, TensorFlow, JAX)

- Scientific Computing & HPC (CFD, Genomics, Quantum Simulation)

- Media, Rendering & Video AI Workflows

- Data Center AI Infrastructure & Cloud GPU Services

- Medical Imaging & Diagnostics

- Digital Twin / Simulation / Modeling

Advantages

NVIDIA HGX H200 – Extreme AI Performance

- 8x H200 GPUs with HBM3e memory offer more than 1.1TB of total GPU memory (8 x 141GB = 1,128GB), well suited to very large models, massive embedding tables, and high-resolution vision tasks.
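To put the total GPU memory in perspective, a rough upper bound on model size can be estimated from bytes per parameter. The sketch below is an assumption-laden back-of-the-envelope calculation, not a sizing guide: it counts weights only and ignores activations, KV cache, optimizer state, and framework overhead, all of which reduce what fits in practice. The function name and precision table are hypothetical.

```python
# Weights-only estimate: how many parameters fit in the combined GPU memory?
# Assumption: decimal gigabytes, and memory holds nothing but model weights.
TOTAL_GPU_MEMORY_GB = 8 * 141  # 8x H200 at 141GB each = 1,128GB

BYTES_PER_PARAM = {"fp32": 4, "fp16/bf16": 2, "fp8/int8": 1}


def max_params_billions(memory_gb, bytes_per_param):
    """Upper bound on parameter count, in billions, for weights alone.

    GB divided by bytes-per-parameter gives billions of parameters
    because 1GB is taken as 1e9 bytes.
    """
    return memory_gb / bytes_per_param


for precision, size in BYTES_PER_PARAM.items():
    bound = max_params_billions(TOTAL_GPU_MEMORY_GB, size)
    print(f"{precision:>10}: up to ~{bound:.0f}B parameters (weights only)")
```

Under these assumptions the weights-only ceiling is about 564B parameters at 16-bit precision; training typically needs several times more memory per parameter than inference.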

CPU Efficiency & Thread Density

- Dual Intel Xeon Platinum 8468 CPUs with 96 cores total and 105MB of L3 cache per CPU, suited to orchestration, dataset preprocessing, and heavily multithreaded workloads.

Memory Bandwidth & Capacity

- 2TB of DDR5-4800 RAM helps keep the GPUs fed with data and leaves room to stage datasets and model checkpoints in host memory.
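The theoretical peak host memory bandwidth follows from the DDR5 transfer rate. The sketch below assumes 8 memory channels per socket (as on Sapphire Rapids), a 64-bit data path per channel, and that all DIMMs actually run at 4800MT/s; with two DIMMs per channel, as 32 DIMMs implies here, a platform may run at a lower speed, so treat the result as a ceiling. The function name is hypothetical.

```python
# Theoretical peak DDR bandwidth: transfers/s * bytes per transfer * channels.
# Assumptions: 8 channels per socket, 64-bit (8-byte) data path per channel,
# and every channel sustaining the rated 4800MT/s.
def ddr_peak_bandwidth_gbs(mega_transfers_per_s, channels, bus_bytes=8):
    """Return peak bandwidth in GB/s (decimal) for one socket."""
    return mega_transfers_per_s * bus_bytes * channels / 1000


per_socket_gbs = ddr_peak_bandwidth_gbs(4800, channels=8)
system_gbs = 2 * per_socket_gbs  # dual-socket system

print(f"Per socket: {per_socket_gbs:.1f} GB/s")
print(f"Both sockets: {system_gbs:.1f} GB/s")
```

Sustained bandwidth in real workloads is lower than this peak.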

Enterprise-Ready Storage

- 10x 7500 PRO U.2 NVMe SSDs: 1 DWPD endurance, about 32TB of raw capacity (8 x 3.8TB + 2 x 960GB), and PCIe 4.0 throughput.
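A drive-writes-per-day (DWPD) rating translates into a daily write budget once the raw capacity is known. The sketch below uses the drive counts from the part list (8x 3.8TB + 2x 960GB) and the 1 DWPD figure; the 5-year rating period is an assumption for illustration and is not stated in the listing, and the function names are hypothetical.

```python
# Convert a DWPD rating into a write budget.
# Capacity comes from the part list; the 5-year period is an assumed value.
RAW_CAPACITY_TB = (8 * 3800 + 2 * 960) / 1000  # 32.32TB across 10 drives
DWPD = 1
ASSUMED_RATING_YEARS = 5  # assumption, not from the listing


def daily_write_budget_tb(capacity_tb, dwpd):
    """TB that can be written per day within the endurance rating."""
    return capacity_tb * dwpd


def lifetime_writes_pb(capacity_tb, dwpd, years):
    """Total rated writes in PB over the rating period."""
    return daily_write_budget_tb(capacity_tb, dwpd) * 365 * years / 1000


print(f"Daily write budget: {daily_write_budget_tb(RAW_CAPACITY_TB, DWPD):.2f} TB")
print(f"Rated writes over {ASSUMED_RATING_YEARS} years: "
      f"{lifetime_writes_pb(RAW_CAPACITY_TB, DWPD, ASSUMED_RATING_YEARS):.1f} PB")
```

This treats all ten drives as one pool; per-drive budgets differ between the 3.8TB and 960GB models.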

Next-Gen Networking

- 8x 400GbE ports (NVIDIA ConnectX-7) plus 2x 200Gb HDR ports (ConnectX-6 VPI), a good fit for distributed compute, storage disaggregation, and GPUDirect RDMA.
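The port counts give a simple line-rate ceiling for moving data in and out of the node. The sketch below sums the listed ports and estimates how long a transfer would take at line rate; it ignores protocol overhead, congestion, and PCIe limits, so real throughput will be lower. The function names and the example payload size are hypothetical.

```python
# Line-rate ceiling from the listed ports: (count, speed in Gb/s).
PORTS = [(8, 400), (2, 200)]  # 8x 400Gb/s + 2x 200Gb/s


def aggregate_gbps(ports):
    """Sum of port speeds in Gb/s."""
    return sum(count * speed for count, speed in ports)


def transfer_seconds(size_gb, link_gbps):
    """Seconds to move size_gb gigabytes at link_gbps gigabits per second."""
    return size_gb * 8 / link_gbps


total_gbps = aggregate_gbps(PORTS)
print(f"Aggregate line rate: {total_gbps} Gb/s ({total_gbps / 8:.0f} GB/s)")

# Example: moving 1,128GB (the full GPU memory footprint) over the 8x 400Gb/s ports.
print(f"1,128GB over 3,200 Gb/s: {transfer_seconds(1128, 8 * 400):.2f} s")
```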

Power & Thermal Efficiency

- 2x 3000W Titanium-efficiency PSUs, a rear I/O design, and optimized airflow support reliable deployment for high-density GPU computing.

Why Should You Choose This Server Right Now?

One of the most powerful AI servers on the market, combining H200 GPUs, Sapphire Rapids CPUs, and ultra-fast networking in one cohesive 8U platform.

✅ Purpose-built for generative AI and LLMs – ready for current models, with headroom that reduces the need for near-term upgrades.

✅ Best-in-class GPU density in a single-node solution – 8x H200s with full bandwidth via HGX architecture and PCIe Gen5.

✅ Unmatched I/O scalability – including 400GbE, GenZ, Gen5 MCIO, and dual-layer NIC riser design for flexibility.

✅ Proven enterprise security and reliability – with TPM 2.0, redundant power, ECC memory, and Supermicro support.

✅ Optimized for modern data centers – fully compatible with GPU clustering, AIaaS platforms, and containerized ML pipelines.